How to Enhance AI Vocal Performance for Better Results

AI-generated vocals can sound clean and musically accurate, but that doesn’t always mean they feel real. The melody might be correct, and the rhythm perfectly aligned — yet something is missing. The voice doesn’t breathe, respond to the music, or carry emotion the way a human performance would.

This lack of nuance is what makes many AI vocals feel mechanical. Without variation in phrasing, subtle pitch movement, or dynamic control, the result can sound rigid or emotionally flat. These are not mistakes — they’re limitations of how vocal synthesis works today. But they can be overcome.

With a detailed editing process, it’s possible to shape a synthetic vocal into a performance that feels expressive, intentional, and musically alive. Many creators search for an AI vocal enhancer, hoping for quick fixes. But what actually makes vocals sound real isn’t automation — it’s control. That’s where ACE Studio makes a difference.

This guide will show you how to use its pitch shaping, timing adjustment, articulation control, and emotion editing tools to bring depth and realism to your AI vocal tracks. It’s not about making vocals perfect — it’s about making them believable.

What Makes a Vocal Performance Sound Natural

AI voices are designed to follow musical instructions with precision. They hit the right notes, stay in rhythm, and pronounce lyrics clearly. But precision isn’t the same as expression. Most AI-generated vocals sound flat, not because they’re wrong, but because they lack the imperfections and nuances that make a voice feel human.

Trained singers instinctively adjust their delivery: they stretch syllables for emphasis, breathe naturally between phrases, and shift their tone to reflect emotion. These tiny variations are what turn a sequence of notes into a performance.

AI-generated vocals typically don’t include this by default. Phrasing can feel rigid, consonants may be too short or too long, and transitions between notes are often abrupt. Even when the melody is technically correct, the performance lacks dynamic flow, breath, and texture. The result is a voice that feels emotionally distant, mechanical instead of musical.

But that can be changed. You can bring a flat AI vocal to life by shaping pitch curves, adjusting consonant timing, and refining emotional parameters like breathiness and tension. Accuracy is only part of it; phrasing, tone variation, and pacing matter just as much. Real voices whisper, swell, pause, and push, and with the right tools, AI voices can do the same.

How ACE Studio Gives You Full Control vs. Typical AI Vocal Enhancers

Most AI vocal tools offer a surface-level fix — they correct pitch, smooth transitions, maybe adjust timing. But expression isn’t something you fix. It’s something you shape.

That’s why ACE Studio is designed not as an automatic enhancer, but as a precision instrument for vocal direction. It gives you control over the actual performance — how each note moves, how emotions shift, and how the phrasing connects with rhythm and tone.

Instead of relying on presets or one-size-fits-all effects, you work directly with the vocal — visually, musically, and in real time.

Pitch and Modulation Tools

ACE Studio gives you full manual control over pitch, allowing you to reshape the contour of each note directly. Using the pitch drawing tool, you can create custom pitch curves that override the AI-generated defaults. This is especially useful when transitions feel too abrupt or when sustained notes lack movement.
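To make the difference between an abrupt and a smoothed transition concrete, here is a minimal Python sketch of a pitch curve gliding between two notes. It is not ACE Studio’s implementation or API: the `glide` function, the raised-cosine shape, and the use of MIDI note numbers (semitones) for pitch are all illustrative assumptions.

```python
import math

def glide(start_pitch: float, end_pitch: float, boundary: float,
          width: float, t: float) -> float:
    """Pitch (as a MIDI note number) at time t for a transition centred on `boundary`.

    Hypothetical illustration: outside the window the pitch holds the old or
    new note; inside it, a raised-cosine curve eases out of the first note and
    into the second. A width of 0 gives an instant, abrupt step.
    """
    half = width / 2.0
    if t <= boundary - half:
        return start_pitch
    if t >= boundary + half:
        return end_pitch
    x = (t - (boundary - half)) / width          # 0.0 at window start, 1.0 at window end
    weight = 0.5 - 0.5 * math.cos(math.pi * x)   # smooth 0 -> 1 with flat ends
    return start_pitch + (end_pitch - start_pitch) * weight

# C4 (60) to D4 (62) at t = 1.0 s, sampled every 20 ms around the change.
times = [0.9 + i * 0.02 for i in range(11)]
abrupt = [glide(60, 62, 1.0, 0.0, t) for t in times]
smooth = [glide(60, 62, 1.0, 0.12, t) for t in times]
for t, a, s in zip(times, abrupt, smooth):
    print(f"{t:.2f}s  abrupt {a:5.2f}  smooth {s:5.2f}")
```

With `width` set to 0 the pitch jumps at the boundary; widening the window lets the pitch leave the first note earlier and settle into the second later, which is the kind of change a gentler, hand-drawn transition makes to the curve.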

The modulation tool adjusts the smoothness of pitch transitions, softening sharp pitch changes and making legato passages more fluid. The vibrato tool lets you add vibrato and define its depth and speed per note. If a change doesn’t work as intended, the pitch eraser instantly restores the original curve. This level of control lets you refine a vocal’s melodic line precisely, not just to correct it but to shape its expression.
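Vibrato depth and speed come down to two numbers: how far the pitch swings (usually expressed in cents, hundredths of a semitone) and how many times per second it oscillates. The sketch below models vibrato as a simple sine wave around a note’s base frequency. It illustrates the concept under that assumption only; it is not how ACE Studio’s vibrato tool works internally, and the function names are hypothetical.

```python
import math

def vibrato_offset_cents(t: float, depth_cents: float, rate_hz: float) -> float:
    """Pitch deviation in cents at time t (seconds) for a sine-shaped vibrato."""
    return depth_cents * math.sin(2 * math.pi * rate_hz * t)

def pitch_with_vibrato(base_hz: float, t: float, depth_cents: float, rate_hz: float) -> float:
    """Apply the cent offset to a base frequency (1200 cents = one octave = factor of 2)."""
    return base_hz * 2 ** (vibrato_offset_cents(t, depth_cents, rate_hz) / 1200)

# Sample an A4 (440 Hz) with a 50-cent, 5.5 Hz vibrato every 10 ms for 0.5 s.
curve = [pitch_with_vibrato(440.0, i * 0.01, 50.0, 5.5) for i in range(50)]
print(f"min {min(curve):.1f} Hz, max {max(curve):.1f} Hz")
```

Raising `depth_cents` widens the swing and raising `rate_hz` makes the wobble faster. As a rough reference, sung vibrato is often described as falling somewhere around 5 to 7 oscillations per second.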

Emotional Parameter Control

Expressiveness in AI vocals is controlled through four core parameters: Air, Falsetto, Tension, and Energy. Each one influences how a note is performed, not just how it sounds. Increasing Air introduces breathiness, giving the vocal a softer, more intimate texture. Raising Energy adds vocal intensity and presence. Tension controls the physical strain in the vocal delivery — tightening or relaxing the feel — while Falsetto shifts the tone toward a lighter, head-voice timbre.

These adjustments are made per note, which gives you the flexibility to change the emotional profile across a phrase or even within a single word. One line can sound restrained and delicate, while the next carries more urgency and strength, all depending on how these values are balanced.
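Because the parameters live on each note, a phrase can be thought of as a list of per-note settings. The sketch below assumes a hypothetical `NoteExpression` record holding Air, Falsetto, Tension, and Energy on a 0.0 to 1.0 scale (ACE Studio’s actual ranges and data model may differ) and ramps Energy across a line to build intensity while leaving the breathy Air setting alone.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class NoteExpression:
    """Hypothetical per-note expression settings on an assumed 0.0-1.0 scale."""
    lyric: str
    air: float = 0.0
    falsetto: float = 0.0
    tension: float = 0.0
    energy: float = 0.5

def clamp(value: float, low: float = 0.0, high: float = 1.0) -> float:
    """Keep a parameter inside the assumed range."""
    return max(low, min(high, value))

def ramp_energy(phrase: list[NoteExpression], start: float, end: float) -> list[NoteExpression]:
    """Interpolate Energy linearly from `start` to `end` across a phrase,
    leaving Air, Falsetto, and Tension untouched."""
    n = len(phrase)
    result = []
    for i, note in enumerate(phrase):
        frac = i / (n - 1) if n > 1 else 0.0
        result.append(replace(note, energy=clamp(start + (end - start) * frac)))
    return result

verse = [NoteExpression(word, air=0.4) for word in ["hold", "on", "to", "me"]]
building = ramp_energy(verse, 0.3, 0.8)
for note in building:
    print(note.lyric, round(note.energy, 2))
```

Running this prints Energy climbing across the four words while each note keeps the same Air value, a small-scale version of moving from restrained to urgent within a single line.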

To see this in action, check out this demo video. It shows how subtle changes to emotion parameters can transform the mood of a vocal, from soft and gentle to bold and expressive. It’s a clear example of how performance shaping in ACE Studio goes beyond just “fixing” the vocal.

Keep in mind that each voice model responds to these parameters differently. A setting that works well on one voice may sound exaggerated on another, or barely register at all. That’s why it’s important to render, listen carefully, and apply changes gradually. Small refinements often produce more believable results than broad, aggressive edits.

Vowel and Consonant Timing Adjustment

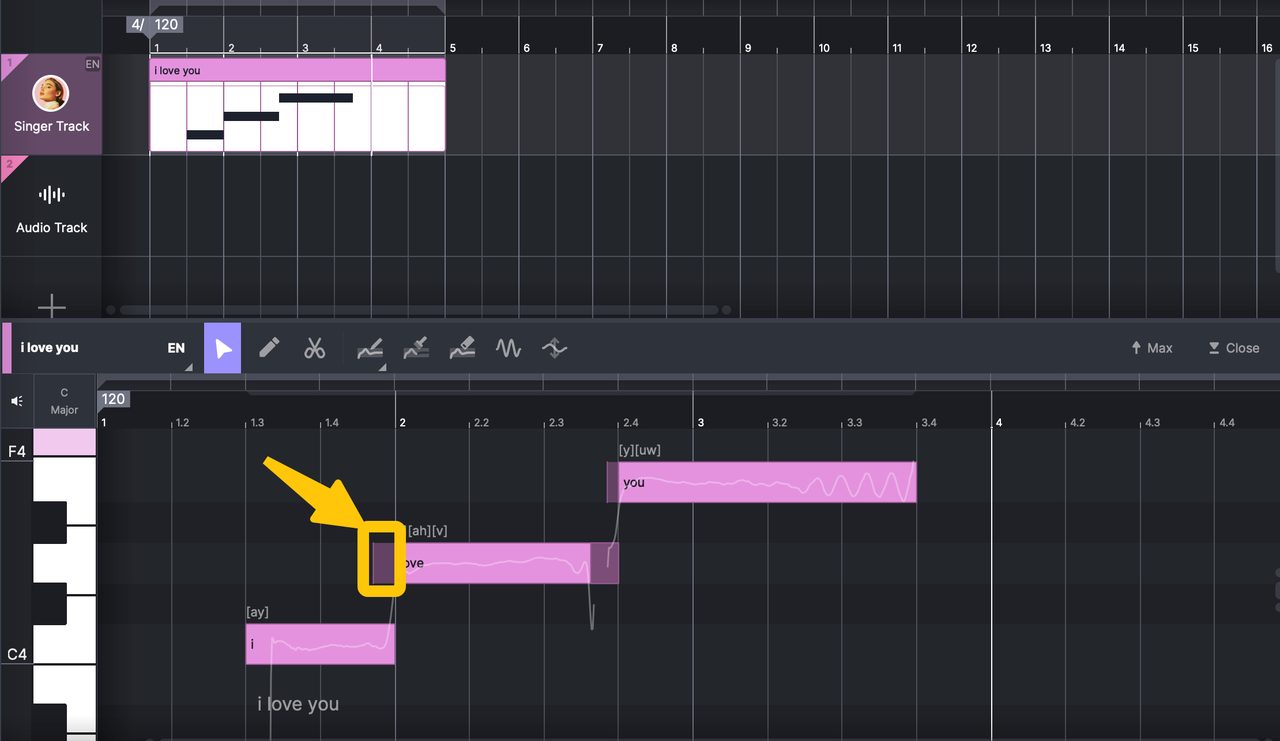

Each note in ACE Studio is visually divided into consonant and vowel zones. Consonants are shown as semi-transparent segments at the start or end of a note, while vowels are represented as solid-colored blocks. This separation allows you to control articulation and phrasing at a granular level.

By dragging the boundaries of consonant zones, you can change their length and positioning. If a consonant is too short, it may sound clipped or unclear; if it drags, it can throw off the rhythm. Adjusting these zones helps align the vocal more tightly with the instrumental and improves intelligibility, especially in rhythmically dense or fast-paced sections. These edits are subtle but critical in creating a natural vocal flow.
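The consonant and vowel zones can be pictured as two back-to-back intervals inside one note. The minimal sketch below assumes a leading consonant that occupies the start of the note, with all times in seconds; the `NoteTiming` class and `drag_boundary` function are hypothetical and only illustrate why dragging the boundary changes both the consonant’s length and where the vowel lands.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class NoteTiming:
    """Hypothetical note with a leading consonant zone followed by a vowel zone."""
    start: float      # note onset on the timeline, in seconds
    end: float        # note end, in seconds
    consonant: float  # length of the consonant zone, in seconds

    @property
    def vowel_onset(self) -> float:
        # The vowel block begins where the consonant zone ends.
        return self.start + self.consonant

def drag_boundary(note: NoteTiming, new_boundary: float,
                  min_consonant: float = 0.01, min_vowel: float = 0.05) -> NoteTiming:
    """Move the consonant/vowel boundary to `new_boundary`, keeping at least
    `min_consonant` of consonant and, when the note is long enough, at least
    `min_vowel` of vowel."""
    lowest = note.start + min_consonant
    highest = note.end - min_vowel
    boundary = max(lowest, min(new_boundary, highest))
    return NoteTiming(note.start, note.end, boundary - note.start)

note = NoteTiming(start=1.0, end=1.5, consonant=0.04)
longer = drag_boundary(note, 1.12)  # e.g. stretch a clipped "s" or "t"
print(f"consonant {longer.consonant:.2f} s, vowel starts at {longer.vowel_onset:.2f} s")
```

Dragging the boundary later lengthens the consonant and pushes the vowel onset back by the same amount, which is why small moves here are heard as changes in rhythm, not only in pronunciation.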

Real-Time Playback with Visual Editing

Every adjustment in ACE Studio — from pitch changes to emotional tweaks and timing edits — is reflected immediately in playback. This real-time feedback is essential for efficient vocal editing. You’re not making blind changes or waiting for renders; you hear the effect as you go.

The interface combines a timeline, waveform, and parameter overlays in a single view. This unified editing environment helps you stay focused and precise. You can work on a full vocal arrangement or fine-tune a single phrase with equal clarity. The blend of visual structure and instant audio response gives you the control needed to shape AI vocals with musical intent.

Step-By-Step: Enhancing AI Vocals In ACE Studio

Refining AI vocals is a technical process, but it should feel like you’re directing a performance, not just editing data. With ACE Studio, each part of the vocal can be shaped intentionally, from pitch movement to articulation and emotional tone. The following steps walk you through a practical workflow, from first import to final export.

Step 1: Import and render the AI vocal

Begin by loading your AI-generated vocal into ACE Studio. The software will render the vocal line and automatically segment it into editable notes. Each note appears with a pitch curve, modulation markers, and clearly defined consonant and vowel regions. This initial render is your raw performance — treat it like a vocal take that’s ready for direction, not just correction.

Step 2: Listen critically and identify weak areas

Before making any edits, play through the entire vocal and note which parts sound too flat, too stiff, or emotionally disconnected. Listen for unnatural transitions between notes, inconsistent phrasing, or a lack of variation in dynamics. This first pass is essential — your ear will tell you what needs attention long before the timeline does.

Step 3: Refine the pitch curves and transitions

Open the pitch editor and begin by reshaping any notes that sound overly linear or abrupt. Use the pitch drawing tool to manually smooth transitions or add gentle movement. For long, sustained notes that feel robotic, apply vibrato by clicking and dragging in the vibrato layer. You can also use the modulation tool to soften any jagged pitch transitions. If something feels off after editing, the pitch eraser lets you reset a specific note to its original curve.

Step 4: Adjust consonant timing and phrasing

Next, focus on articulation. Zoom into areas where phrasing feels rushed or slightly off-beat. In ACE Studio, consonants are visually separated from vowels. Dragging the boundaries of these zones changes how early or late a consonant is pronounced, and how long it lasts. These timing edits are subtle, but they have a major impact on rhythmic precision and vocal clarity, especially in faster passages or dense lyric sections.

Step 5: Shape the emotional tone using parameters

Highlight the notes or phrases that feel emotionally flat or mismatched with the mood of the music. In the Emotion Panel, adjust parameters like Air to introduce breathiness, Falsetto for a lighter tone, Tension to control vocal strain, and Energy to boost overall intensity. These values can be adjusted per note, which allows you to build contrast across verses and choruses. Always listen after each change — parameter sensitivity varies by voice model, and context is everything.

Step 6: Playback, refine, and export

Once your edits are in place, play through the full vocal and listen for consistency and realism. Check that transitions are smooth, energy levels feel intentional, and articulation matches the rhythm of your track. Make any final tweaks, then export the vocal as audio, or continue working with it inside your DAW. If needed, keep a saved version with edit history so you can return to specific points in the performance later.

How to Make AI Vocals Sound Less Synthetic

Once you've taken care of the technical details — pitch, timing, emotion, and articulation — the next step is shaping how the vocal feels. This is where many creators begin searching for an AI vocal enhancer to make the voice sound more natural or expressive with minimal effort. But in most cases, realism doesn’t come from automation. It comes from subtle, deliberate adjustments made with musical intention.

Instead of relying on one-click tools that apply the same preset across an entire performance, ACE Studio gives you control at the note level. You decide where the vocal needs more breath, where the consonants need tighter alignment, and how the phrasing should rise or fall with the rhythm. That flexibility lets you shape the outcome far more precisely than most AI vocal enhancers allow.

Start by thinking musically. What is this vocal trying to say? Where should it relax? Where should it build tension? These questions help guide phrasing and energy flow. Use small, targeted changes — particularly with emotion parameters — to bring life to the line. A bit more breath here, a touch less tension there, and suddenly the voice feels intentional, not mechanical.

Pay close attention to timing. Consonant placement isn’t just about pronunciation; it shapes the groove. If a phrase feels off, the cause is often timing rather than pitch. Fixing articulation can restore rhythm and clarity in a way that’s hard to achieve with enhancer-style automation.

Finally, always step back and listen in context. What you’re doing with ACE Studio is more than editing; it’s directing a performance. Used with intention, that kind of direction can achieve what a standalone one-click enhancement tool cannot, because it gives you full creative control over the result.

Limitations And Opportunities In Today’s AI Vocal Workflow

AI-generated vocals have made remarkable progress in tone accuracy, pronunciation, and synthesis quality. But when it comes to performance nuance, there’s still a significant gap between generation and production-ready vocals. Most voice models are optimized for basic musicality — correct pitch, tempo alignment, and intelligibility — but they rarely produce natural phrasing or convincing emotional variation out of the box.

This is where tools like ACE Studio become essential. While the AI provides the raw material, the refinement stage still depends heavily on human input. No model yet understands the artistic context of a vocal line — whether it should rise with tension or relax into a breathy whisper. That level of decision-making still belongs to the creator.

Another challenge is consistency. Some voice models behave unpredictably when emotion parameters are pushed too far. Others may interpret the same note differently depending on context. These inconsistencies can’t be solved with automation alone; they require careful listening, detailed adjustments, and sometimes creative problem-solving.

But within those limitations, there’s also opportunity. Having manual control means you're not boxed in by default behaviors. You can shape a vocal performance with more flexibility than even some real recordings allow — adjusting emotion note by note, shifting timing microscopically, layering expression where the original take fell short.

In other words, the workflow isn’t perfect, but it’s powerful. It asks more of the user, but also gives more in return. ACE Studio bridges the gap between AI generation and artistic intention by putting the tools in your hands, which is still where nuance, emotion, and identity in a performance are best shaped.

Realistic AI Vocals Come from Creative Control

AI vocal generation has opened new creative doors, but expressive performance still requires human direction. Tools like ACE Studio don’t just improve technical accuracy — they give you the ability to shape how a vocal feels, not just how it sounds.

Where generic AI vocal enhancers apply the same filter across every phrase, ACE Studio gives you control over pitch, timing, emotion, and articulation — the building blocks of musical expression. That’s what transforms a synthetic line into a performance that resonates.

The technology will continue to advance. But until AI can understand artistic intent, it’s up to creators to close that gap. With the right tools and a trained ear, AI voices can go beyond just following notes — they can carry meaning. And that’s where your direction makes all the difference.

If you're ready to move past automation and start shaping performances with real intent, ACE Studio is the place to start.

FAQ

Is ACE Studio an AI Vocal Enhancer?

Not in the one-click, automatic sense. ACE Studio doesn’t apply prebuilt effects; instead, it gives you full manual control over pitch, timing, emotion, and articulation. If you want an AI vocal enhancer that lets you actually direct the performance, ACE Studio is likely the right fit, even if it doesn’t carry that label.

Can I use ACE Studio with other music production software?

Yes. ACE Studio is designed to integrate smoothly into existing workflows. You can export edited vocals as standard audio files and import them into any DAW (Digital Audio Workstation) for mixing and mastering. This makes it easy to use ACE Studio as a focused vocal editor alongside your broader production setup.

How is ACE Studio different from one-click AI vocal enhancers?

One-click tools apply generic enhancements with little user control, which can be useful for speed but often lack musical nuance. ACE Studio gives you deep, per-note editing capabilities. Instead of applying preset effects, you direct the performance, note by note, phrase by phrase — making it far more suitable for professional, expressive results.

Do emotional parameters work the same across all AI voices?

Not exactly. Each voice model in ACE Studio responds to emotion parameters in slightly different ways. One voice may react strongly to even a small increase in Energy, while another may need a larger change to achieve the same effect. That’s why it’s important to preview, experiment, and make incremental changes based on listening, not just visual feedback.

Can I apply different emotional settings to different parts of the same vocal?

Yes. In ACE Studio, emotion parameters can be adjusted on a per-note basis. This means you can design contrast within a single performance — soft and airy in one line, then powerful and energetic in the next. It’s especially effective for creating tension and release within a song structure.

What’s the best way to create breath realism in AI vocals?

Since AI vocals don’t breathe automatically, you simulate breathing through phrasing and timing. This can be done by shortening a final vowel, leaving a brief pause between notes, or lowering the Energy and Tension on a specific phrase to create a natural drop-off. Realism comes from shaping space, not just sound.
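The suggestions in this answer can be combined into one small edit on the last note of a phrase. The sketch below is a hypothetical illustration, not an ACE Studio feature or API: it assumes each note has start and end times in seconds plus Energy and Tension values on a 0.0 to 1.0 scale, shortens the final note to open a gap before the next phrase, and lowers Energy and Tension on that note for a softer drop-off.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Note:
    """Hypothetical note: timing in seconds, expression on an assumed 0.0-1.0 scale."""
    lyric: str
    start: float
    end: float
    energy: float
    tension: float

def add_breath_gap(phrase: list[Note], gap: float = 0.25, drop: float = 0.2) -> list[Note]:
    """Shorten the phrase's final note by `gap` seconds (never past its start)
    and lower its Energy and Tension by `drop` (never below 0.0)."""
    if not phrase:
        return []
    *body, last = phrase
    tail = replace(
        last,
        end=max(last.start, last.end - gap),
        energy=max(0.0, last.energy - drop),
        tension=max(0.0, last.tension - drop),
    )
    return [*body, tail]

line = [Note("all", 0.0, 0.5, 0.6, 0.4), Note("night", 0.5, 1.5, 0.7, 0.5)]
for note in add_breath_gap(line):
    print(note.lyric, note.end, round(note.energy, 2), round(note.tension, 2))
```

Here the final word ends 0.25 seconds earlier and loses some Energy and Tension, leaving space where a singer would breathe before the next phrase.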

Is ACE Studio suitable for beginners?

While ACE Studio offers advanced control, its interface is visually intuitive and built around musical workflows. Beginners can start with basic pitch and emotion edits and grow into more complex adjustments. The real-time feedback makes it easy to learn by doing, especially for users with musical instincts.

Can I combine multiple AI voices in one project?

Yes. You can load and edit multiple vocal tracks using different AI voices. This is useful for harmonies, backing vocals, or character-based layering. Just keep in mind that each voice may respond differently to the same parameter settings, so balance adjustments accordingly.

Does ACE Studio support multilingual vocals?

Currently, ACE Studio supports AI voices in multiple languages, depending on the voice model. Some models are optimized for English, while others handle Japanese, Spanish, or Mandarin. Always check the documentation for each voice model to understand its language compatibility and pronunciation behavior.